Machine learning models are powerful tools for uncovering insights in your data, such as patterns and predictions in customer behavior, real-time analytics and scoring, and sentiment analysis.

The Syntasa platform simplifies and optimizes the end-to-end governance of your models. The platform provides the tools for ingesting and transforming your data; developing and training your models, including version management; and operationalizing your models to provide scoring, business KPI monitoring, and model drift alerting.

Model development and experimentation

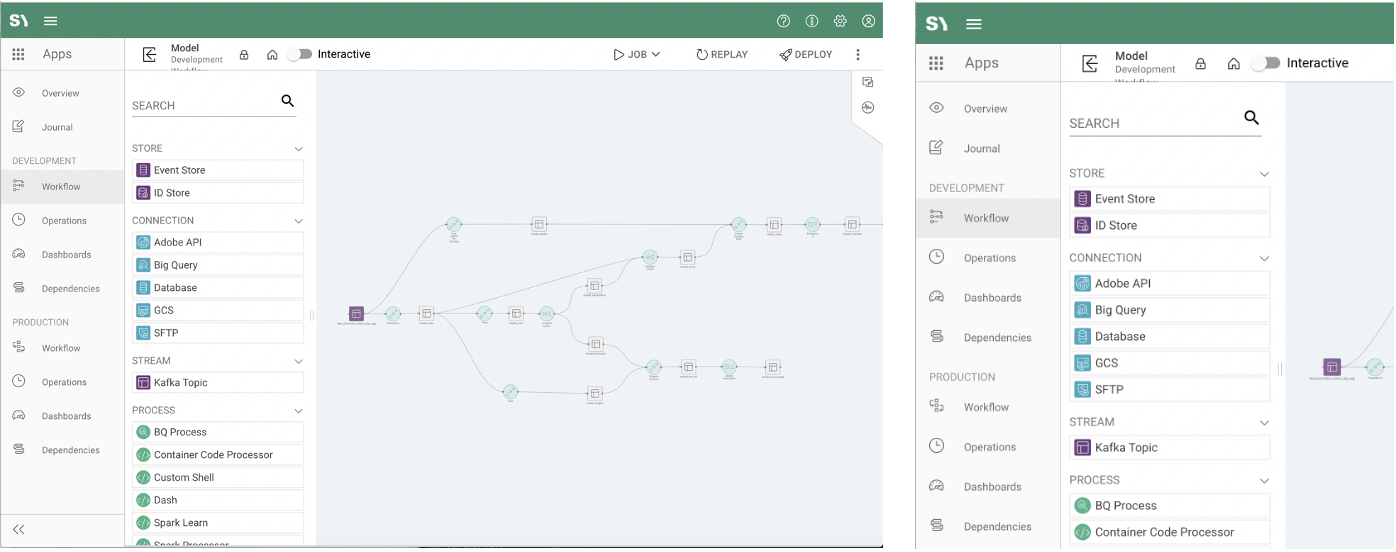

An app in the Syntasa platform encapsulates a logical segment of work, such as ingesting and transforming data, applying a model, or activating the model's results. Apps are flexible: you can place each of these segments in its own app or combine all of them in a single app.

In addition to encapsulating a model's logic, parameters, sample data, and so on, an app incorporates features needed to govern and maintain your models, such as:

Development and experimentation

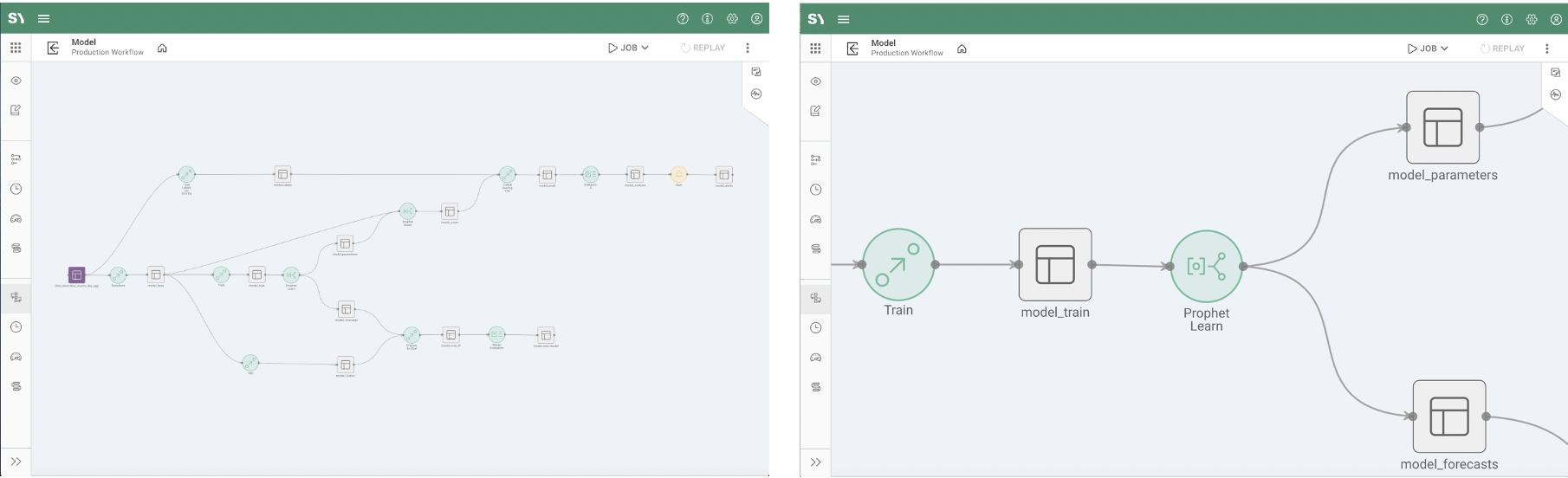

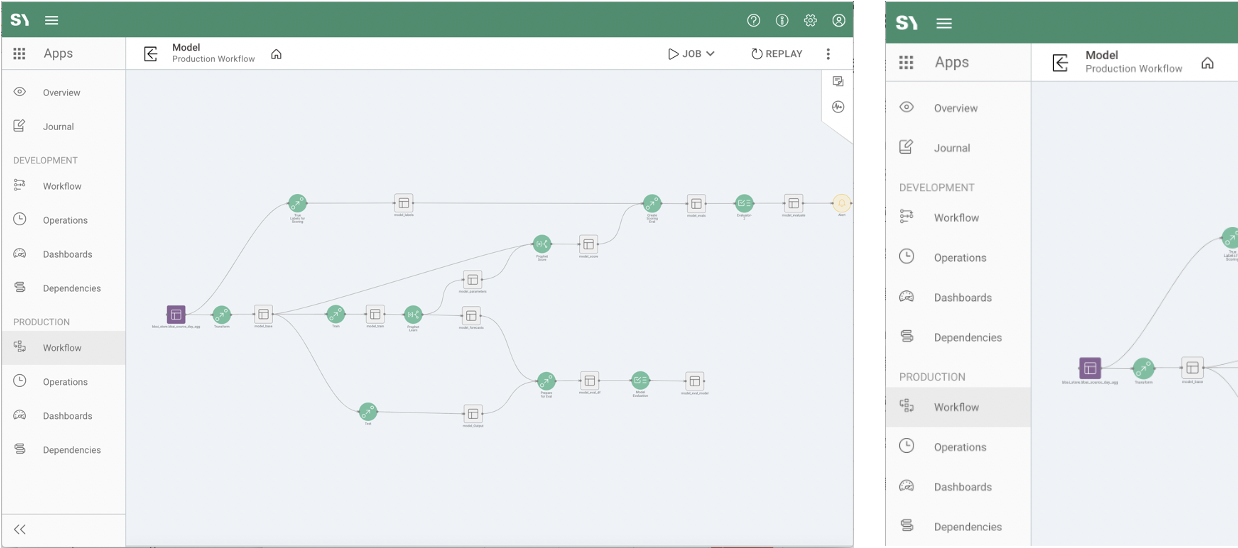

An app has separate development and production environments. In the production environment, the app workflow cannot be edited; you can only create and schedule jobs. In the development environment, you can create, test, and update the app workflow and models without risk of disrupting the deployed production app or its data.

Apps can use the machine learning models built into the Syntasa platform as processes. Users can also build their own user-defined processes, which other users can then use as no-code processes. In the future, apps will also be able to use the platform's integration with MLflow to tap into models housed in the MLflow Model Registry.

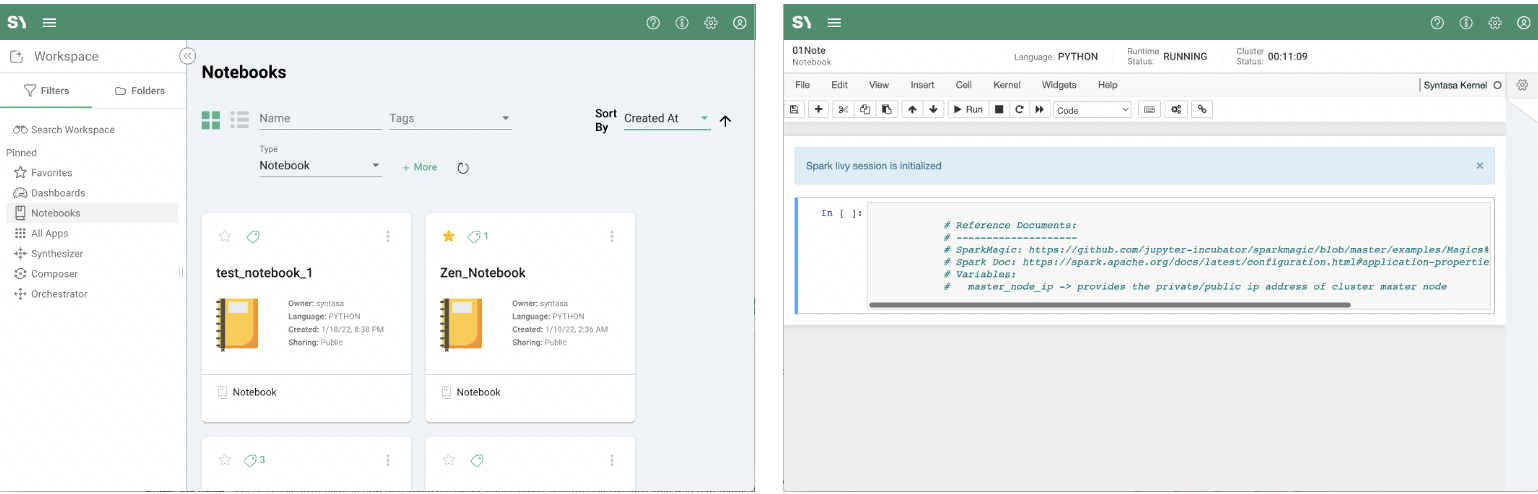

Independently of apps, but still within the Syntasa platform, users can create and experiment with a model in a notebook before bringing it into an app. Syntasa notebooks use a tailored version of JupyterHub that combines Jupyter notebook functionality with cloud resources managed by the Syntasa platform, providing shareable notebooks backed by autoscaling Spark clusters and supporting Python and Scala.

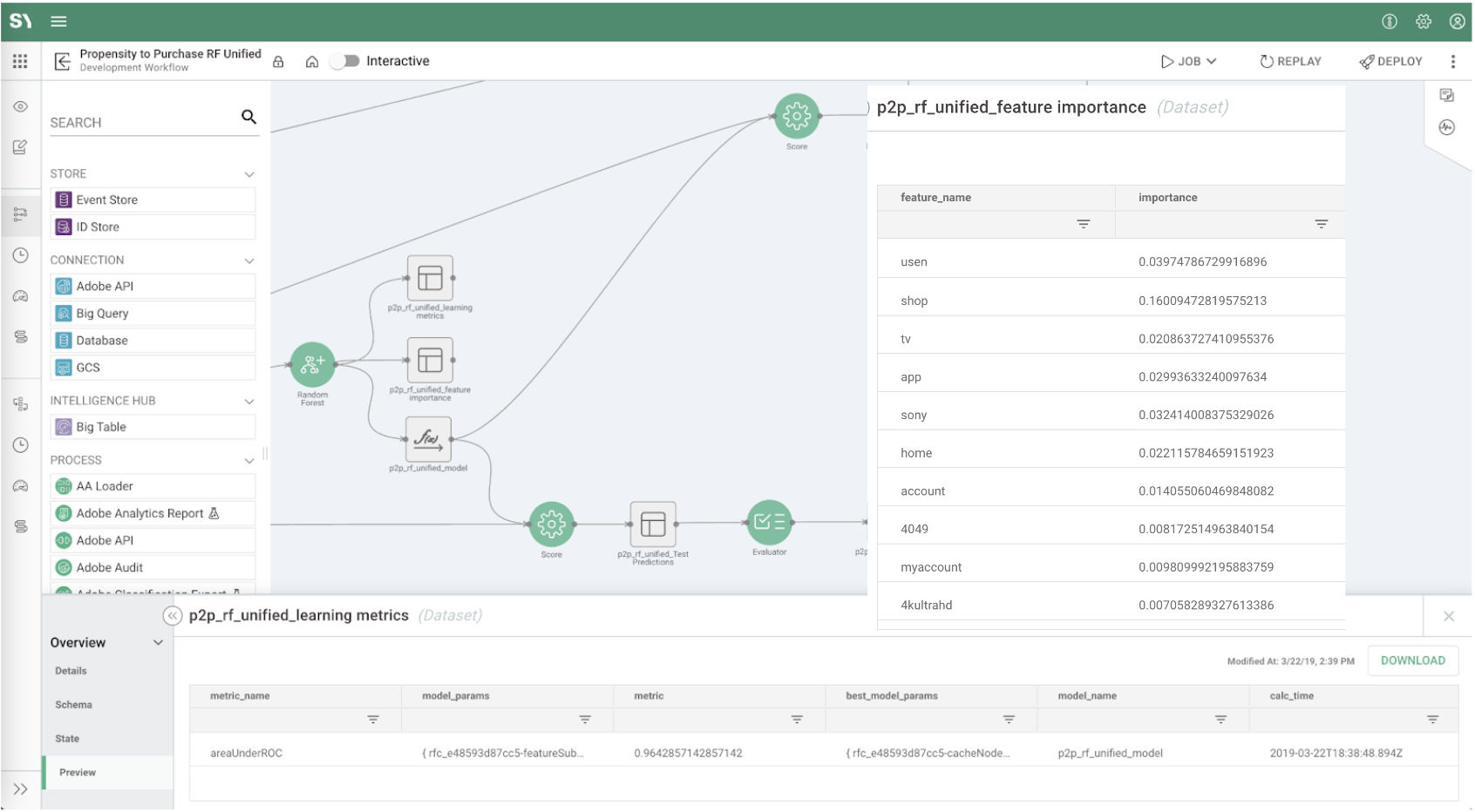

Viewing and analyzing test results

The developed model consists of one or more processes within the Syntasa app. These processes run within user-created jobs inside the app. When creating a job, you can select all processes or only one or a few, depending on the focus of your experiment.

The results of the models and other processes are written directly to tables. The tables appear on the app workflow canvas, and their contents can be reviewed directly from the Syntasa user interface.

The app and job can also use the Syntasa platform's integration with MLflow Tracking to capture test results as a run within an experiment. The results of successive runs can be captured under the same MLflow experiment and then viewed and compared.

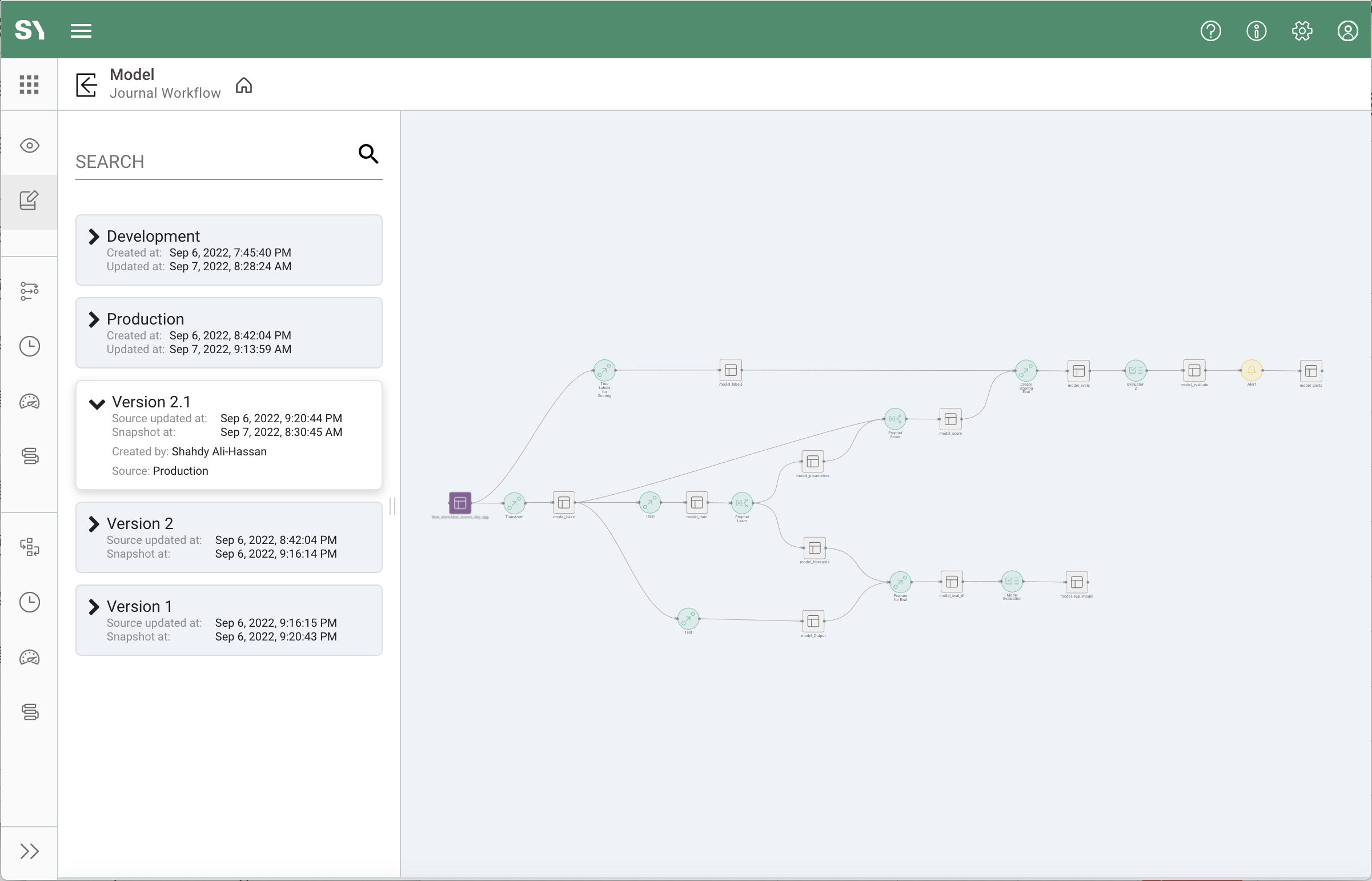

Version control

The Syntasa application captures and manages app versions through snapshots. A snapshot of an app is captured automatically every time the app's development environment is deployed to production, and users can also take a snapshot manually at any time.

While developing and experimenting with your model and pipeline, you may take a snapshot of version 1, alter the configurations and/or logic, and snapshot that as version 2.

The full list of snapshots can be viewed from the app's Journal menu, where the details of each version can be reviewed. Any version can be loaded into the development environment, overwriting the current development version.
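The snapshot workflow above can be pictured as a small data structure. The following Python sketch is purely illustrative: `AppJournal`, `Snapshot`, and their fields are hypothetical names, not part of any Syntasa API, and a real snapshot holds much more than a configuration dictionary. It shows version 1 being captured, a parameter changing, version 2 being captured, and version 1 being loaded back over the development configuration.

```python
# Illustrative model of an app's snapshot journal.
# AppJournal and Snapshot are hypothetical names, not a Syntasa API.
import copy
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Snapshot:
    version: int
    author: str
    taken_at: datetime
    config: dict  # independent deep copy of the development configuration


@dataclass
class AppJournal:
    dev_config: dict
    snapshots: list = field(default_factory=list)

    def snapshot(self, author: str) -> Snapshot:
        """Record the current development configuration as the next version."""
        snap = Snapshot(
            version=len(self.snapshots) + 1,
            author=author,
            taken_at=datetime.now(timezone.utc),
            config=copy.deepcopy(self.dev_config),
        )
        self.snapshots.append(snap)
        return snap

    def load(self, version: int) -> None:
        """Overwrite the development configuration with a stored version."""
        for snap in self.snapshots:
            if snap.version == version:
                self.dev_config = copy.deepcopy(snap.config)
                return
        raise KeyError(f"no snapshot with version {version}")


# Usage: snapshot version 1, change a parameter, snapshot version 2, then restore 1.
journal = AppJournal(dev_config={"max_depth": 4})
journal.snapshot("alice")
journal.dev_config["max_depth"] = 8
journal.snapshot("alice")
journal.load(1)
print(journal.dev_config)  # {'max_depth': 4}
```

Deep copies keep each snapshot independent of later edits, which is what makes loading an older version a true restore.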

Deployment

Once you are satisfied with the development, experimentation, and testing of your model in the app's development environment, you can deploy the app to the production environment. Any further updates to the app must be made in the development environment and then deployed to production.

The production app matches the deployed development version in the configuration and code of the model and pipeline, but the production app and its data are kept in their own, separate production store.

Beyond separating development from production, which allows worry-free development and experimentation, an app incorporates features needed to audit and secure your models, such as:

One-click deployment

Deployment takes a single click, although the application performs some validations before completing the operation. Before deploying, it also takes a snapshot of the current production version. This snapshot records who performed the deployment and when, and makes it possible to roll back to the previous version if needed.
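The deploy sequence described above (validate, snapshot production, promote, optionally roll back) can be sketched in a few lines of Python. This is a hypothetical illustration only: `Deployer`, `ValidationError`, and the single "must have processes" check are assumptions standing in for the platform's actual, undocumented validations.

```python
# Illustrative one-click deploy: validate, snapshot production, then promote.
# Deployer and its validation rule are hypothetical, not the Syntasa implementation.
import copy
from datetime import datetime, timezone


class ValidationError(Exception):
    """Raised when the development app fails a pre-deployment check."""


class Deployer:
    def __init__(self, dev: dict, prod: dict):
        self.dev = dev
        self.prod = prod
        self.history = []  # audit trail: who deployed, when, and what production was before

    def validate(self) -> None:
        # Placeholder check; the platform's actual validations are not described here.
        if not self.dev.get("processes"):
            raise ValidationError("app has no processes to deploy")

    def deploy(self, user: str) -> None:
        self.validate()  # fail before production is touched
        self.history.append({
            "deployed_by": user,
            "deployed_at": datetime.now(timezone.utc),
            "previous_prod": copy.deepcopy(self.prod),
        })
        self.prod = copy.deepcopy(self.dev)

    def rollback(self) -> None:
        """Restore the production version captured before the most recent deployment."""
        if not self.history:
            raise RuntimeError("nothing to roll back to")
        self.prod = copy.deepcopy(self.history[-1]["previous_prod"])


# Usage
d = Deployer(dev={"processes": ["score_v2"]}, prod={"processes": ["score_v1"]})
d.deploy("bob")
print(d.prod)  # {'processes': ['score_v2']}
d.rollback()
print(d.prod)  # {'processes': ['score_v1']}
```

Rollback here leaves the audit history intact, so the record of who deployed and when survives the restore.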

Security and permissions

Syntasa apps, and other objects such as dashboards, notebooks, and folders, have a sharing setting that dictates who can see and access the object. The setting is first chosen when you create your app, but the owner or a system administrator can change it at any time.

While developing and experimenting with your model and app, you might set the permissions to private to hide the app from other users. Once the app is ready for deployment to production, you can change its access to public or to specific user groups within the Syntasa application.

The following sharing options are available for apps and other objects. Users with system administrator rights can always see all objects, regardless of the setting:

- Only me (Private) - Only accessible to the owner of the object and system administrators

- Everyone (Public) - Accessible to all Syntasa application users

- Users in groups - Accessible to users who are members of the indicated user groups as well as system administrators

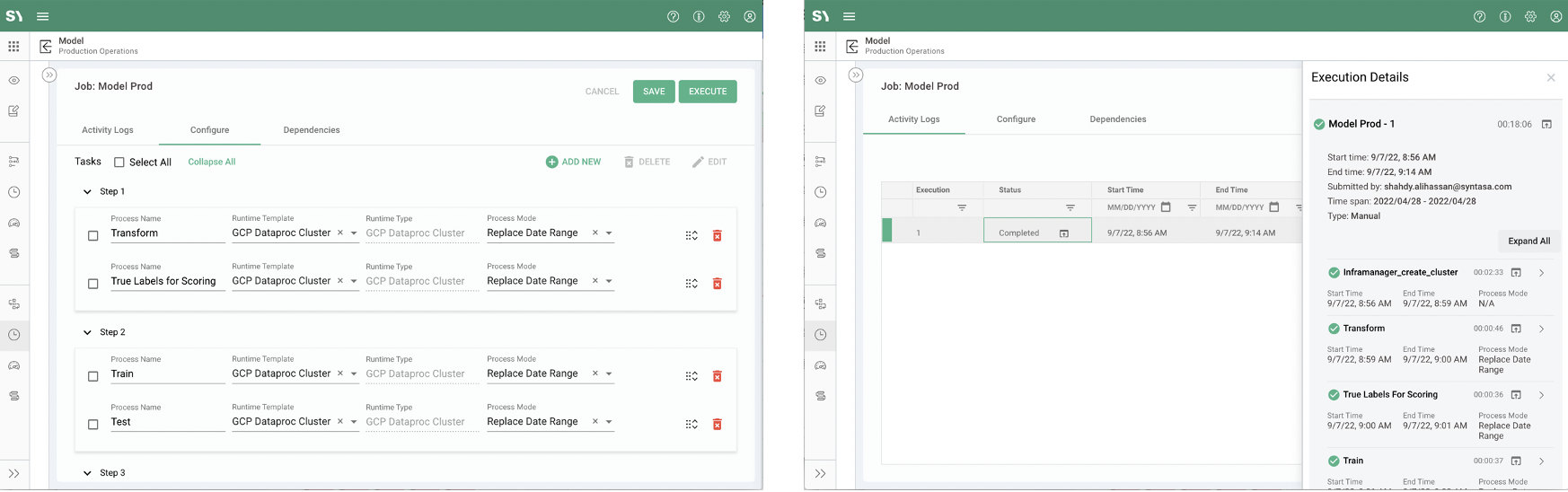
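The three sharing options, plus the administrator override, amount to a simple access rule. The Python sketch below is a hypothetical illustration: `Sharing`, `User`, `SharedObject`, and `can_access` are invented names, and letting the owner keep access under the group setting is an assumption, since the options above do not state it.

```python
# Illustrative access check for the sharing options listed above.
# All names here are hypothetical; this is not the Syntasa permission model.
from dataclasses import dataclass, field
from enum import Enum


class Sharing(Enum):
    PRIVATE = "Only me"
    PUBLIC = "Everyone"
    GROUPS = "Users in groups"


@dataclass
class User:
    name: str
    is_admin: bool = False
    groups: set = field(default_factory=set)


@dataclass
class SharedObject:
    owner: str
    sharing: Sharing
    allowed_groups: set = field(default_factory=set)


def can_access(user: User, obj: SharedObject) -> bool:
    if user.is_admin:  # system administrators can always see all objects
        return True
    if obj.sharing is Sharing.PUBLIC:
        return True
    if obj.sharing is Sharing.PRIVATE:
        return user.name == obj.owner
    # GROUPS: membership in any indicated group grants access.
    # Assumption: the owner also keeps access.
    return user.name == obj.owner or bool(user.groups & obj.allowed_groups)


# Usage
app = SharedObject(owner="alice", sharing=Sharing.GROUPS, allowed_groups={"data-science"})
print(can_access(User("carol", groups={"data-science"}), app))  # True
print(can_access(User("dave", groups={"marketing"}), app))      # False
print(can_access(User("root", is_admin=True), app))             # True
```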

Operations

Once your app is deployed to production, several features of the app and of the wider Syntasa application help make it fully operational, including:

Job creation and monitoring

Jobs from the development environment are not copied to production, because testing jobs with criteria suited to sample data would not be relevant there. Only jobs created in the production environment can be scheduled. A scheduled job can run on a time-based schedule, be triggered by an event such as the arrival of a file, or run as a streaming job.
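The scheduling rule above can be expressed as a guard on the environment plus a choice among three trigger types. This Python sketch is hypothetical: `Job`, `Environment`, `Trigger`, and `schedule` are illustrative names, not Syntasa's job API.

```python
# Illustrative scheduling guard: only production jobs can be scheduled.
# Job, Environment, Trigger, and schedule are hypothetical names.
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Environment(Enum):
    DEVELOPMENT = "development"
    PRODUCTION = "production"


class Trigger(Enum):
    TIME = "time"            # runs on a time-based schedule
    EVENT = "event"          # runs when an event occurs, e.g. a file arrives
    STREAMING = "streaming"  # runs continuously on streaming data


@dataclass
class Job:
    name: str
    environment: Environment
    trigger: Optional[Trigger] = None


def schedule(job: Job, trigger: Trigger) -> Job:
    if job.environment is not Environment.PRODUCTION:
        raise PermissionError(f"{job.name}: only production jobs can be scheduled")
    job.trigger = trigger
    return job


# Usage
nightly = schedule(Job("nightly_scoring", Environment.PRODUCTION), Trigger.TIME)
print(nightly.trigger)  # Trigger.TIME
```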

After the app has been operationalized with a scheduled job, you can monitor the job for success or failure from the app's Operations menu or from the system-level Activity menu, which shows all jobs across all apps. You can also configure alerts to notify users when jobs succeed or fail.

Business KPI and model alerting

The status of jobs, business KPI measures, and model health and performance can all be reviewed and monitored from the Syntasa application. In addition, alerts can be configured to notify interested parties when an incident occurs.

Alerts can be created for a wide variety of scenarios, such as:

- App jobs and platform health - Users can be notified when a job fails, succeeds, or has not completed by a certain time; when a job runs longer than normal; when platform cost spikes; and so on.

- Business and data KPIs - Alerts can notify users when certain business measures fall below or rise above prescribed thresholds, when data is missing or late for a certain period, or when certain measures fall outside the normal range based on recent averages.

- Model health - Model drift monitoring can be configured within an alert to warn users when a model drifts, a sign that the model may need to be optimized or retrained.
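The alert conditions above reduce to simple checks. The Python sketch below is hypothetical: the function names and thresholds are illustrative, the "normal range" is assumed to mean within `k` standard deviations of recent values, and drift is measured with the Population Stability Index (PSI), a common drift metric swapped in here for illustration. The document does not say which drift measure the Syntasa platform uses.

```python
# Illustrative alert checks; names, thresholds, and the PSI metric are assumptions.
import math
from datetime import datetime, timedelta
from statistics import mean, stdev


def threshold_alert(value: float, low: float, high: float) -> bool:
    """A business KPI is below or above its prescribed thresholds."""
    return value < low or value > high


def out_of_normal_range(recent: list, value: float, k: float = 3.0) -> bool:
    """Value lies more than k standard deviations from the recent average (needs >= 2 points)."""
    return abs(value - mean(recent)) > k * stdev(recent)


def data_is_late(last_arrival: datetime, now: datetime, max_delay: timedelta) -> bool:
    """No new data has arrived within the allowed delay."""
    return now - last_arrival > max_delay


def psi(expected: list, actual: list, eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions given as proportions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


# Usage
print(threshold_alert(0.92, low=0.95, high=1.0))              # True
print(out_of_normal_range([100, 102, 98, 101, 99], 130))     # True
print(data_is_late(datetime(2024, 1, 1, 6), datetime(2024, 1, 1, 12), timedelta(hours=4)))  # True
# A frequently cited rule of thumb treats PSI above 0.25 as a significant shift.
drift = psi([0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1])
print(drift > 0.25)                                           # True
```

In practice the training-time distribution would serve as `expected` and recent scoring data as `actual`, both binned the same way.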